New DPIA on EduGenAI: Dutch generative AI solution for education

DPIA outcomes: 12 known data protection risks

Commissioned by SURF, the IT cooperative of Dutch education and research institutions, Privacy Company advised on measures to prevent data protection risks, and risks of overreliance on AI, while the new service was being developed. Although Privacy Company could not yet test the accuracy and human rights impact of the processing of personal data through the user interface and the different models, it mapped the data flows of Content Data, Account Data, Diagnostic Data, Support Data and Website Data and helped identify effective privacy-preserving measures.

Based on this analysis, the DPIA identifies a large number of legal, technical and organisational development goals. If SURF and the AI Hub meet all these goals, and the education organisations help test the adequacy and effectiveness of the measures, all identified high data protection risks can be mitigated.

Privacy Company publishes this blog post about the findings with SURF’s permission. The press release and the full DPIA are available on SURF’s website.

Functionalities EduGenAI

EduGenAI is an AI system that provides privacy-friendly access to multiple generative AI tools. These include open source Large Language Models (LLMs) hosted by SURF itself, in SURF’s own datacentre in Watergraafsmeer, as well as pseudonymised access to well-known commercial LLMs in the cloud, such as the various OpenAI LLMs (hosted by Microsoft on Azure), Llama, Mistral and Anthropic’s Claude. EduGenAI will also offer access to one or more search engines to ‘update’ the generated information. Education organisations that use EduGenAI can decide whether to allow their end-users to use cloud LLMs and search engine(s), or only the on-premises LLMs.
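
How an organisation-level choice like this could be enforced is not described in the DPIA summary. The Python sketch below is purely illustrative: it assumes each model in a catalogue is tagged as on-premises or cloud, and that a per-organisation policy filters what end-users are offered. All names (`Hosting`, `OrgPolicy`, `available_models`, and the model names) are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Hosting(Enum):
    ON_PREMISES = "on-premises"  # open source LLMs in SURF's own datacentre
    CLOUD = "cloud"              # commercial LLMs reached via pseudonymised access


@dataclass(frozen=True)
class Model:
    name: str
    hosting: Hosting


@dataclass(frozen=True)
class OrgPolicy:
    """Hypothetical choices an education organisation makes for its end-users."""
    allow_cloud_llms: bool
    allow_search: bool  # search-engine access would be gated the same way


def available_models(catalogue: list[Model], policy: OrgPolicy) -> list[Model]:
    # On-premises models are always offered; cloud models only if the organisation opts in.
    return [m for m in catalogue
            if m.hosting is Hosting.ON_PREMISES or policy.allow_cloud_llms]


catalogue = [
    Model("onprem-open-llm", Hosting.ON_PREMISES),
    Model("cloud-llm", Hosting.CLOUD),
]
strict = OrgPolicy(allow_cloud_llms=False, allow_search=False)
print([m.name for m in available_models(catalogue, strict)])  # ['onprem-open-llm']
```

Filtering the catalogue before it reaches the user interface, rather than rejecting requests afterwards, means a disallowed cloud model is never even offered as a choice.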

EduGenAI enables users to ground the answers by adding documents and sources to their prompts. Users can also ground their prompts permanently with additional information by creating Personae, and can use meta prompts to make the information in a Persona available for specific purposes, such as sharing with colleagues or students.
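
The difference between one-off and permanent grounding can be sketched as follows. This is a hypothetical model, not EduGenAI’s implementation: it assumes a chat-style interface in which messages carry a `role` and `content` (a common LLM API convention that the DPIA does not specify). A Persona’s instructions and sources are sent as a system message with every prompt, while attached documents ground only the current prompt. `Persona` and `build_messages` are illustrative names.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Persona:
    name: str
    instructions: str                                  # hypothetical meta prompt set by the creator
    sources: list[str] = field(default_factory=list)  # permanent grounding material


def build_messages(prompt: str,
                   persona: Optional[Persona] = None,
                   attachments: tuple[str, ...] = ()) -> list[dict[str, str]]:
    """Assemble a chat-style message list (role/content convention assumed)."""
    messages: list[dict[str, str]] = []
    if persona is not None:
        # Persistent grounding: sent with every prompt that uses this Persona.
        sources = "\n\n".join(persona.sources)
        messages.append({"role": "system",
                         "content": f"{persona.instructions}\n\nSources:\n{sources}"})
    # One-off grounding: documents attached to this prompt only.
    messages.append({"role": "user", "content": "\n\n".join([*attachments, prompt])})
    return messages


tutor = Persona("Course assistant",
                "Answer only from the course material.",
                ["Week 1 covers the GDPR principles."])
for message in build_messages("What does week 1 cover?", tutor):
    print(message["role"], "->", message["content"][:40])
```

Because a Persona is just stored data, sharing it with colleagues or students would amount to sharing this object, which is also why personal data inside Persona sources deserves the same scrutiny as prompts.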

EduGenAI is set to include many measures to protect both the privacy of users and the rights of persons who appear in grounding documents, prompts and answers. EduGenAI aims to share as little personal data as possible with the external cloud LLMs.

EduGenAI is scheduled to include many measures in the user interface to prevent overreliance on AI, that is, users placing too much trust in the accuracy and reliability of the answers and forgetting to check them.

Users can choose which AI system they want to use to generate information, and can switch systems even during a prompt dialogue. Meanwhile, EduGenAI protects their privacy, and the rights of persons mentioned in the prompt dialogue, by applying a filter that masks personal data.
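
The DPIA summary does not say how the masking filter works. One common approach is reversible placeholder substitution: personal data is replaced by tokens before a prompt leaves SURF, and the tokens are swapped back in the answer shown to the user. The sketch below assumes that approach and detects only two patterns (e-mail addresses and Dutch phone numbers) with regular expressions. Names, student numbers and other free-text identifiers would need named-entity recognition, which this sketch does not attempt. `mask`, `unmask` and `PATTERNS` are hypothetical names.

```python
import re

# Hypothetical patterns; a real filter would also need named-entity recognition.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"(?:\+31 ?|0)[1-9](?:[ -]?\d){8}"),  # Dutch numbers only
}


def mask(text: str) -> tuple[str, dict[str, str]]:
    """Replace detected personal data with placeholders; return text and mapping."""
    mapping: dict[str, str] = {}
    for label, pattern in PATTERNS.items():
        def substitute(match: re.Match) -> str:
            value = match.group(0)
            # Reuse the placeholder if the same value was already masked.
            for placeholder, original in mapping.items():
                if original == value:
                    return placeholder
            placeholder = f"[{label}_{len(mapping) + 1}]"
            mapping[placeholder] = value
            return placeholder
        text = pattern.sub(substitute, text)
    return text, mapping


def unmask(text: str, mapping: dict[str, str]) -> str:
    """Restore the original values in a model's answer (mapping never leaves SURF)."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text


masked, mapping = mask("Mail jan.jansen@example.nl or call 06-12345678.")
print(masked)  # Mail [EMAIL_1] or call [PHONE_2].
```

Under this design only the masked text would reach a cloud LLM; the mapping stays inside the service, so switching models mid-dialogue would not expose the original values.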

Privacy controls

EduGenAI is designed to implement many privacy by design and privacy by default measures. Key planned measures include:

- Strip all metadata (IP addresses, cookies, identifiers) from user queries.

- Apply a personal data masking filter to the contents of queries.

- Allow the education organisations to determine what LLMs can be accessed (only on-premises, or also cloud LLMs).

- Store the chat history by default on the end user device, and not centrally on SURF’s servers.
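
The first measure, stripping metadata, is usually done with an allow-list: instead of removing known identifiers, a proxy copies only the fields the external LLM strictly needs. The sketch below assumes such a proxy; the request fields, `ALLOWED_HEADERS` and `strip_metadata` are hypothetical and not taken from the DPIA.

```python
from dataclasses import dataclass

# Hypothetical allow-list: the only client headers passed on to an external LLM.
ALLOWED_HEADERS = {"content-type", "accept"}


@dataclass
class IncomingRequest:
    client_ip: str
    headers: dict[str, str]
    model: str
    messages: list[dict[str, str]]


def strip_metadata(request: IncomingRequest) -> tuple[dict[str, str], dict]:
    """Build headers and body for the external LLM without client metadata."""
    # Cookies, user identifiers and any other header not on the list are dropped.
    headers = {k: v for k, v in request.headers.items() if k.lower() in ALLOWED_HEADERS}
    # The client IP is never copied; when the proxy re-sends the request,
    # the provider sees the proxy's address instead of the user's.
    body = {"model": request.model, "messages": request.messages}
    return headers, body


request = IncomingRequest(
    client_ip="192.0.2.10",
    headers={"Content-Type": "application/json", "Cookie": "session=abc", "X-User-Id": "s123456"},
    model="cloud-llm",
    messages=[{"role": "user", "content": "Hello"}],
)
print(strip_metadata(request))
```

An allow-list fails safe: a new header added by a browser or client library is dropped by default instead of silently leaking to the provider.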

GDPR roles

SURF will offer a data processing agreement to the collaborating education organisations (COs) for 4 of the 5 identified categories of personal data: Account Data, Diagnostic Data, Support Data and Website Data. However, because the education sector is a unique sector in which the aim is to learn from each other, SURF has opted for joint controllership between the COs and EduGenAI with regard to the substantive quality of the service. SURF will work closely with the institutions participating in the pilot to investigate whether the proposed measures are effective and which language models are most suitable for different tasks.

Outcome: 12 low data protection risks

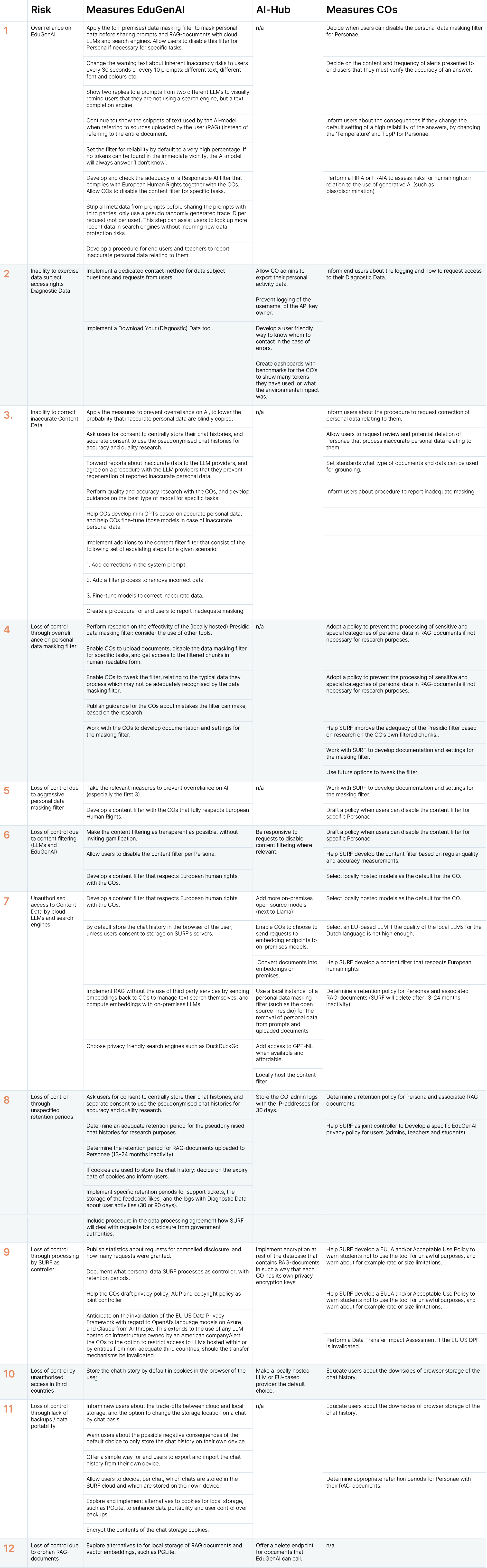

The outcome of this DPIA is that, provided all development goals are effectively implemented and subsequently tested, SURF and the education organisations can take effective measures to reduce or mitigate the 12 identified data protection risks. The recommended measures are listed in the (long) table: