Update DPIA on Microsoft 365 Copilot for education: amber lights

Outcomes of the Update DPIA: 2 medium and 9 low data protection risks

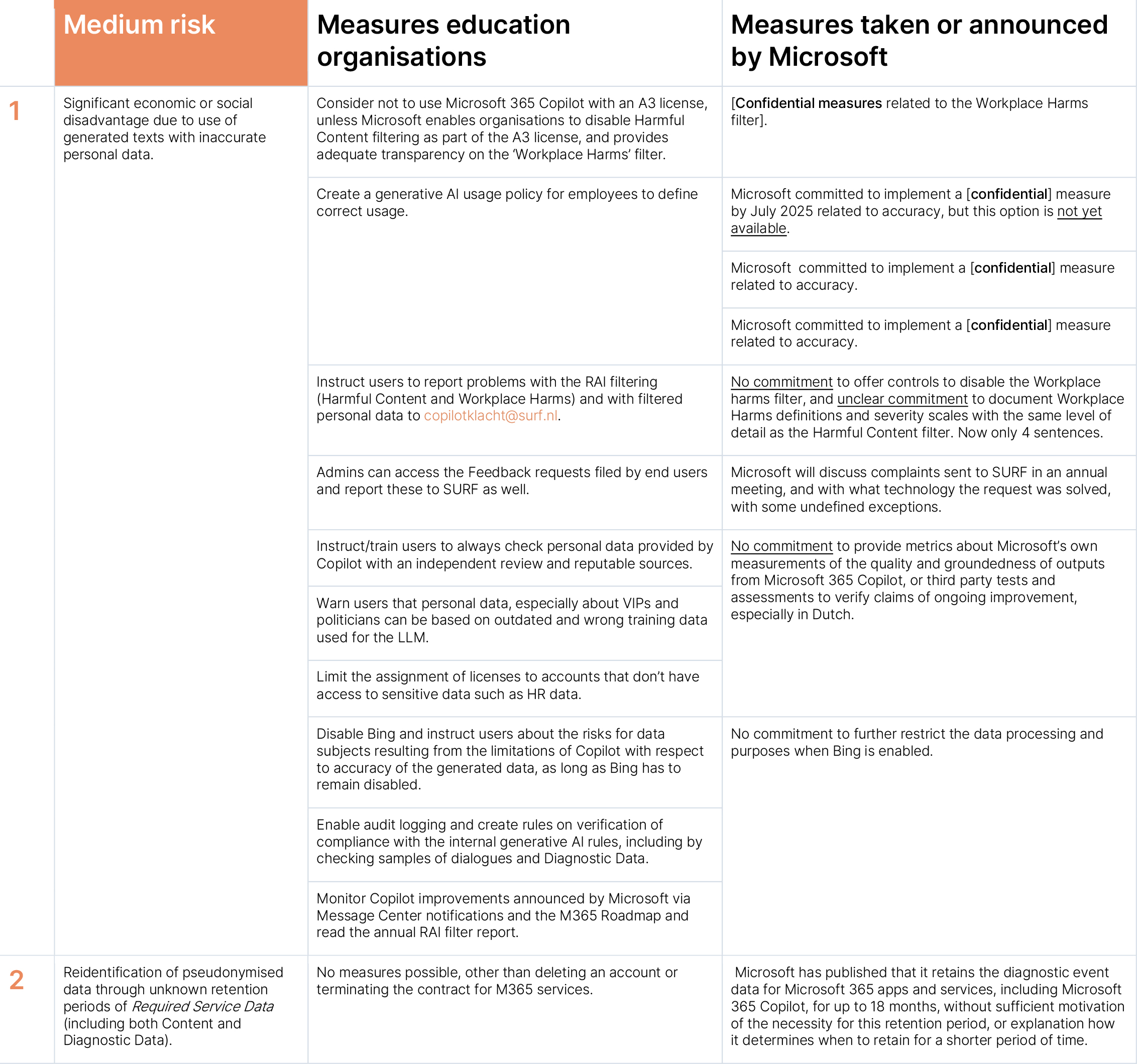

Microsoft has taken or announced measures to mitigate the 4 previously identified high risks. Two of these risks are now rated as medium/amber instead of high/red. They relate to the processing of inaccurate and incomplete personal data generated in Copilot's replies, and to the excessive retention period of 18 months for the pseudonymised metadata, the Required Service Data and Telemetry Data.

SURF advice: Exercise restraint in deployment and carefully weigh each use

This outcome means that education organisations can consider limited use of the service. However, they must first take many precautions. Microsoft’s mitigating measures are not yet sufficient, or cannot yet be verified, to rate two of the former high risks as low, and the remaining ‘low’ risks are only low if education organisations take the recommended measures. In the absence of more information and of options to disable or adjust the RAI filtering (both the Harmful Content filter and the new Workplace Harms filter), and pending Microsoft’s announced improvements to the user interface, these two risks are rated as medium.

New assessment after half a year

SURF aims to reassess the risks in half a year, once Microsoft has implemented the announced measures. Pending the outcome of the continuing dialogue with Microsoft, education organisations must take into account that the medium risks may be reassessed as high if Microsoft takes insufficient mitigating measures.

Measures education organisations must take

SURF calls on education organisations that start using Microsoft 365 Copilot to share a copy of all complaints about inaccurate personal data, including complaints about incorrect filtering of data. With 2 amber lights, this assessment places a serious responsibility on education organisations to determine for which work and learning tasks they can permit the use of Copilot without causing unacceptable data protection risks. Among other things, they must draft clear policies on the use of generative AI, follow the recommendations for privacy-friendly settings (including blocking access to Bing), and closely monitor the quality of work generated with Copilot. The full list of measures is in the table below.

Company publishes this blog post about the findings with permission from SURF. See the press release, the Update DPIA and the initial DPIA of 17 December 2024 on the SURF website.